Recently the Meson Build System gained some momentum. It is time to stop that.

Not that Meson is a bad piece of software – on the contrary, it is quite well designed.

Still it makes building C/C++ applications worse, by (quoting xkcd) basically creating this:

It sets out to create a cross-platform, more readable and faster alternative to autotools. But there is already CMake that solves this.

You might say that CMake is ugly, but note that the CMake 2.x you might have tried is not the same CMake 3.x that is available today. Many patterns have improved and are now both more logical and more readable.

Nowadays the difference between Meson and CMake is just a matter of syntactic preference. The Meson authors seem to agree here.

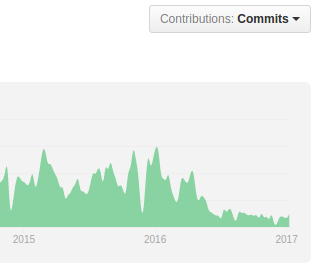

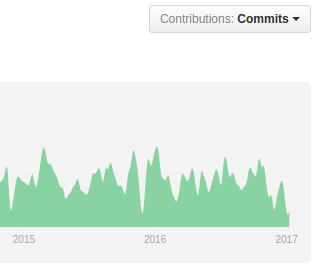

The actual criterion for selecting a build system however should be tooling support and community spread. CMake easily wins here:

After the introduction of the server mode it got native support by QtCreator, CLion, Android Studio (NDK) and even Microsofts Visual Studio. Native means that you do not have to generate any intermediate project files, but the CMakeLists.txt is used directly by the IDE.

On the community spread side we got e.g. KDE, OpenCV, zlib, libpng, freetype and as of recently Boost. These projects using CMake not only guarantees that you can easily use them, but that you can also include them in your build via add_subdirectory such that they become part of your project. This is especially useful if you are cross-compiling – for instance to a Raspberry Pi.

On the other hand, reinventing a wheel that is tailored to the needs of a specific community (Gnome), means that it will fall behind and eventually die. This is what is currently happening to the Vala language that had a similar birth to Meson.

The meson devs might object that Meson generates build files that run faster on a Raspberry Pi. However if your cross compiling is working you do not need that. And honestly, that particular improvement could have been also achieved by providing a patch to the CMake Ninja generator..

Addendum 27-11-2018

Stumbled over another great guide to modern CMake.

Addendum 15-06-2018

A new guide for CMake called CGold can be found here, which is of comparable quality to the Meson docs.

Addendum 4-1-2018

Some comments (rightfully) note that Meson has generally a better documentation and avoids some of its pitfalls. However this is mostly due to Meson not being around long enough such that the way you do things in Meson changed. Neither did it see such a widespread use like CMake yet. (think of corner-cases)

But even if you argue that this is precisely the point why you should use Meson, I would argue that improving the existing documentation in CMake and adding more educational warnings is easier then writing something from scratch.

Addendum 29-02-2019

Part of the perceived superiority of Meson, was that it just was not in use for long enough to notice its flaws – contrary to long lasting legacy of CMake.

With its adaptation, things like only one global namespace start to get attention – things that are already solved in CMake..